How is data stored in random access memory (RAM) and cache?

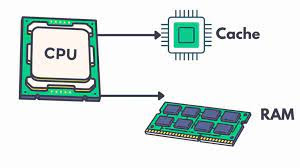

Random Access Memory (RAM) and cache are two types of computer memory used to store data that the computer is currently working with. The main differences between the two are speed and capacity: cache is much faster than RAM but holds far less data.

RAM is a type of volatile memory: it stores data temporarily while the computer is running and loses its contents when power is removed. It is called "random access" because any location can be read or written directly, in roughly the same time, regardless of its address. RAM holds the parts of the operating system, applications, and data that the computer is currently using.

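Data in RAM is stored as binary values. In the most common type, dynamic RAM (DRAM), the memory is divided into small units called "cells," each of which holds one bit using a tiny capacitor and a transistor: a charged capacitor represents a 1 and a discharged one represents a 0. Because the charge leaks away over time, the cells must be refreshed periodically. Cells are arranged in a grid of rows and columns, and each group of cells has an address. When the processor reads or writes data, the memory controller uses the address to select the right row and column, so any location can be reached without stepping through the others.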
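As a rough illustration of addressable storage, the sketch below models RAM as a small array of bytes and stores a number at a chosen address. The names `ram`, `store`, `load`, and `bits` are made up for this example, and the little-endian byte order is an assumption; real hardware works with electrical charges, not Python objects.

```python
# Toy model: RAM as a flat array of byte-sized locations, each with an address.
ram = bytearray(16)  # 16 bytes, all initially zero


def store(address: int, value: int, size: int = 2) -> None:
    """Write `value` into `size` consecutive bytes starting at `address`.

    Little-endian order (lowest byte first) is assumed here.
    """
    ram[address:address + size] = value.to_bytes(size, "little")


def load(address: int, size: int = 2) -> int:
    """Read `size` bytes starting at `address` and rebuild the integer."""
    return int.from_bytes(ram[address:address + size], "little")


def bits(address: int) -> str:
    """Show the eight bits held at a single byte address."""
    return format(ram[address], "08b")


store(4, 1000)            # jump straight to address 4; no need to visit 0..3
print(load(4))            # 1000
print(bits(4), bits(5))   # 11101000 00000011
```

The number 1000 does not fit in one byte, so it occupies two neighbouring addresses, and each byte is just a pattern of eight on/off bits.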

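Cache memory, on the other hand, is a type of high-speed memory that holds copies of frequently or recently accessed data from RAM. It is typically built from static RAM (SRAM), which is faster than DRAM but takes more chip area per bit. As a result, cache is much smaller than RAM but much faster, and it is used to improve the overall performance of the computer.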

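Cache memory is organized as a hierarchy of levels, with each level closer to the processor core being smaller but faster. The level closest to the core is called "L1 cache," followed by the larger and slower "L2 cache" and "L3 cache." In modern processors all three levels are built into the processor chip itself, with L3 typically shared among cores; in some older systems, L2 or L3 cache was located on the motherboard. Data moves between RAM and cache in fixed-size blocks called cache lines (commonly 64 bytes), which are loaded when the processor needs them and evicted to make room for new blocks.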
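The loading and unloading of blocks can be sketched with a toy simulation. This is a minimal model, not how any particular processor works: it assumes a single cache level, a 64-byte block size, only four slots, and a simple "direct-mapped" placement rule in which each block can live in exactly one slot. The class name `ToyCache` and the constants are hypothetical.

```python
BLOCK_SIZE = 64  # bytes per block (assumed; a common cache-line size)
NUM_SLOTS = 4    # number of blocks the toy cache can hold (assumed, unrealistically small)


class ToyCache:
    """A single-level, direct-mapped cache that only tracks which blocks it holds."""

    def __init__(self) -> None:
        self.slots = [None] * NUM_SLOTS  # block number stored in each slot
        self.hits = 0
        self.misses = 0

    def access(self, address: int) -> bool:
        """Return True on a hit, False on a miss (the block is then loaded)."""
        block = address // BLOCK_SIZE   # which 64-byte block the address is in
        slot = block % NUM_SLOTS        # the one slot this block may occupy
        if self.slots[slot] == block:
            self.hits += 1
            return True
        # Miss: load the block from "RAM", evicting whatever the slot held.
        self.slots[slot] = block
        self.misses += 1
        return False


cache = ToyCache()
for address in range(256):   # read 256 consecutive bytes
    cache.access(address)

print(cache.hits, cache.misses)  # 252 4
```

Only the first access to each 64-byte block misses; the other 63 accesses find the block already in the cache. This is why programs that touch nearby addresses in sequence tend to benefit from caching.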

In summary, both RAM and cache store data as binary values that the processor can address directly. RAM holds the programs and data in use while the computer is running, while cache keeps copies of the most frequently accessed data from RAM closer to the processor to improve performance.
